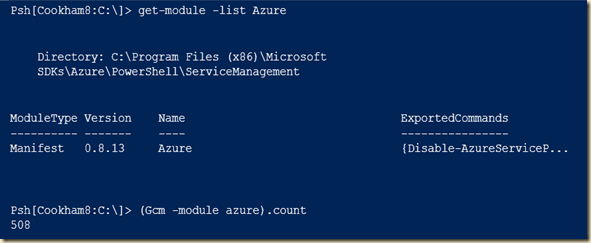

I've been playing with Azure VMs for the past few weeks. I can, obviously, do most things from the GUI, but somehow, as a PowerShell guy, that just seems wrong! So I've been honing my PowerShell skills. The Azure module contains over 500 commands. In the version on my home workstation, there are 509 commands, of which 35 are aliases.
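If you want to check the numbers on your own machine, here is a minimal sketch. It assumes the module is installed under the name `Azure`; the counts will differ between module versions.

```powershell
# Assumption: the Azure module is installed and is named 'Azure'
Import-Module Azure

# Collect everything the module exports (cmdlets, functions and aliases)
$cmds = Get-Command -Module Azure

# Total number of commands
$cmds.Count

# Break the total down by command type (Cmdlet, Alias, Function)
$cmds | Group-Object -Property CommandType -NoElement
```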

As an aside, those aliases are used to map the old 'Windows Azure Pack' cmdlet names to the newer cmdlets that have in effect replaced them. For example, the old Get-WAPackSubscription is now an alias for Get-AzureSubscription. This is a great idea for helping existing scripts keep working. I suspect we'll see more of it. For those of you with older Windows Azure Pack based scripts: you may want to consider upgrading them to use the new Azure module's native cmdlets.
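A quick way to see those aliases for yourself is sketched below. It again assumes the module is named `Azure`, and which aliases you get back depends on the module version you have installed.

```powershell
# Assumption: the Azure module is installed and is named 'Azure'
Import-Module Azure

# Which aliases point at Get-AzureSubscription?
# (SilentlyContinue avoids an error if your version defines none)
Get-Alias -Definition Get-AzureSubscription -ErrorAction SilentlyContinue

# List every alias the module exports, with the cmdlet each one resolves to
Get-Command -Module Azure -CommandType Alias |
    Sort-Object -Property Name |
    Format-Table -Property Name, Definition -AutoSize
```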

The first thing you have to know about Azure VMs is that, while they are in the end 'just' a Hyper-V VM, they are a bit more complex than on-premises VMs. There are several Azure features to consider, including storage accounts, images, endpoints, locations, and Azure services.

In Azure, each VM runs within an Azure service. I like to think of the Azure service as the load balancer. In the simplest case of a single VM, you have a VM and the service, and you use the name and IP address of the service to access the VM. The service also allows you to provide more instances of the VM in a load balancing and failover (LBFO) fashion. But today, I just want to create a simple VM. The VM I am creating runs inside a new Azure service of the same name as the VM.

To create an Azure VM, you have two main options: use an Azure VM image as the starting point for your VM, or create the VM on premises, ship its VHD up to Azure, and then create a VM using that uploaded VHD. In this blog post, I'll concentrate on the first, leaving the second for another day.

A VM image is a sysprepped operating system image, often with additions and customisations. The image was built from some base OS, possibly with some changes made. You can get simple base OS images, which are in effect what would be on the product DVD. Others have been customised, some heavily. Azure images come from a host of places, including Microsoft. Once the reference image is created, the creator then prepares it (with sysprep) for use as an image.

You can easily create a VM from an existing VM image. To see the VM images in Azure, you simply use Get-AzureVMImage. As of writing there are 438 images. Of these, 187 are Linux based and 251 are Windows based. A given image is available in at least one, and typically all, Azure locations. An image belongs to an image family and has a unique image name. With 125 image families to choose from, finding your image (and its specific image name) comes down to calling Get-AzureVMImage and piping the output to your normal PowerShell toolset!

One suggestion if you are experimenting: a Get-AzureVMImage call takes a while, as you are going out to the internet (in my case from behind a slow ADSL line). So save the images to a variable, then pipe the variable to where/group/sort/select, thus avoiding the round trip up to the Azure data center each time.
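As a rough sketch of that approach, the snippet below fetches the image list once and then explores it locally. The property names used here (`OS`, `ImageFamily`) are the ones I'd expect on the objects that Get-AzureVMImage returns; run `$imgs | Get-Member` to confirm them on your module version.

```powershell
# One (slow) round trip to Azure; everything after this works on the cached copy
$imgs = Get-AzureVMImage

# How many images are there in total?
$imgs.Count

# Split between Linux and Windows images
$imgs | Group-Object -Property OS -NoElement

# The ten image families with the most images
$imgs |
    Group-Object -Property ImageFamily -NoElement |
    Sort-Object -Property Count -Descending |
    Select-Object -First 10
```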

Today, I just want to create a VM from an image of Windows Server 2012 R2 Datacenter. So to find the image I do this:
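A minimal sketch of that lookup follows. The wildcard pattern is my assumption, chosen to match the label used in the script later in this post; adjust it to whatever labels you see in your own output.

```powershell
# Cache the image list so the filtering below is done locally
$imgs = Get-AzureVMImage

# Show the Windows Server 2012 R2 Datacenter images, newest first,
# along with the image name that New-AzureVMConfig needs
$imgs |
    Where-Object Label -like 'Windows Server 2012 R2 Datacenter*' |
    Sort-Object -Property PublishedDate -Descending |
    Format-Table -Property Label, PublishedDate, ImageName -AutoSize
```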

Next, in creating the Azure VM, you need an Azure Storage account in which to store the VHD for your VM. The VHD starts off as, in effect, a copy of the image (i.e. a sysprepped version of Windows) stored in Azure Storage. You can pre-create the storage account, although in this case, I let the cmdlets build the storage account for me.
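If you would rather pre-create the storage account yourself, something along these lines should work. This is a sketch only: the storage account name is hypothetical (it must be globally unique and consist of 3 to 24 lowercase letters and digits), and I'm assuming you want it set as the default for your current subscription.

```powershell
# Hypothetical storage account name; pick your own unique one
$storageName = 'psh1storage01'

# Assumption: use the subscription that is currently selected
$subscriptionName = (Get-AzureSubscription -Current).SubscriptionName

# Create the storage account in the same location as the VM
New-AzureStorageAccount -StorageAccountName $storageName -Location 'West Europe'

# Make it the default storage account for later VM creation
Set-AzureSubscription -SubscriptionName $subscriptionName -CurrentStorageAccountName $storageName
```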

So here's the script:

```powershell
# Create-Azurevm.ps1
# This script creates an Azure VM from a VM image

# Set values
# Label of the VM image we are looking for
$label = 'Windows Server 2012 R2 Datacenter, December 2014'

# VM and VM service names
$vmname        = 'psh1'
$vmservicename = 'psh1'

# VM admin username and password
$vmusername = 'tfl'
$vmpassword = '~+aQ8$3£-4'

# Instance size and location
$vminstancesize = 'Basic_A3'
$vmlocation     = 'West Europe'

# Next, create a credential for the VM
# (not used by the commands below)
$username = "$vmname\$vmusername"
$password = ConvertTo-SecureString $vmpassword -AsPlainText -Force
$VMcred   = New-Object System.Management.Automation.PSCredential $username, $password

# Get all Azure VM images
$imgs = Get-AzureVMImage

# Then get the image name of the image whose label matches
$img   = $imgs | Where-Object Label -eq $label
$imgnm = $img.ImageName

# OK - do it and create the VM
# (ending each line with the pipe avoids fragile backtick continuations)
New-AzureVMConfig -Name $vmname -InstanceSize $vminstancesize -ImageName $imgnm |
    Add-AzureProvisioningConfig -Windows -AdminUsername $vmusername -Password $vmpassword |
    New-AzureVM -ServiceName $vmservicename -Location $vmlocation
```

Once the VM is created, you can then start and use it. Having said that, there are some prerequisites, like setting up endpoints and enabling remote management inside the VM. I'll cover these topics in later blog posts.
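In the meantime, here is a small sketch for checking on the new VM and starting it if needed. It uses the service and VM names from the script above; the status properties shown are the ones I'd expect Get-AzureVM to return, so treat them as an assumption and check with Get-Member.

```powershell
# Names taken from the script above
$vmservicename = 'psh1'
$vmname        = 'psh1'

# Fetch the VM and show its current state
$vm = Get-AzureVM -ServiceName $vmservicename -Name $vmname
$vm | Format-List -Property Name, InstanceStatus, PowerState

# Start the VM if it has been stopped
Start-AzureVM -ServiceName $vmservicename -Name $vmname
```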
